Artificial intelligence (AI) has become an integral part of modern technology, powering applications across many industries. Yet AI models often run into serious obstacles that stem from the limitations of the hardware they run on. Many of these constraints trace back to the von Neumann bottleneck: the inefficiency that arises because processing and memory are separate units in conventional computing systems. Researchers led by Professor Sun Zhong of Peking University are tackling this challenge with new approaches that combine advanced algorithms with sophisticated memory systems.

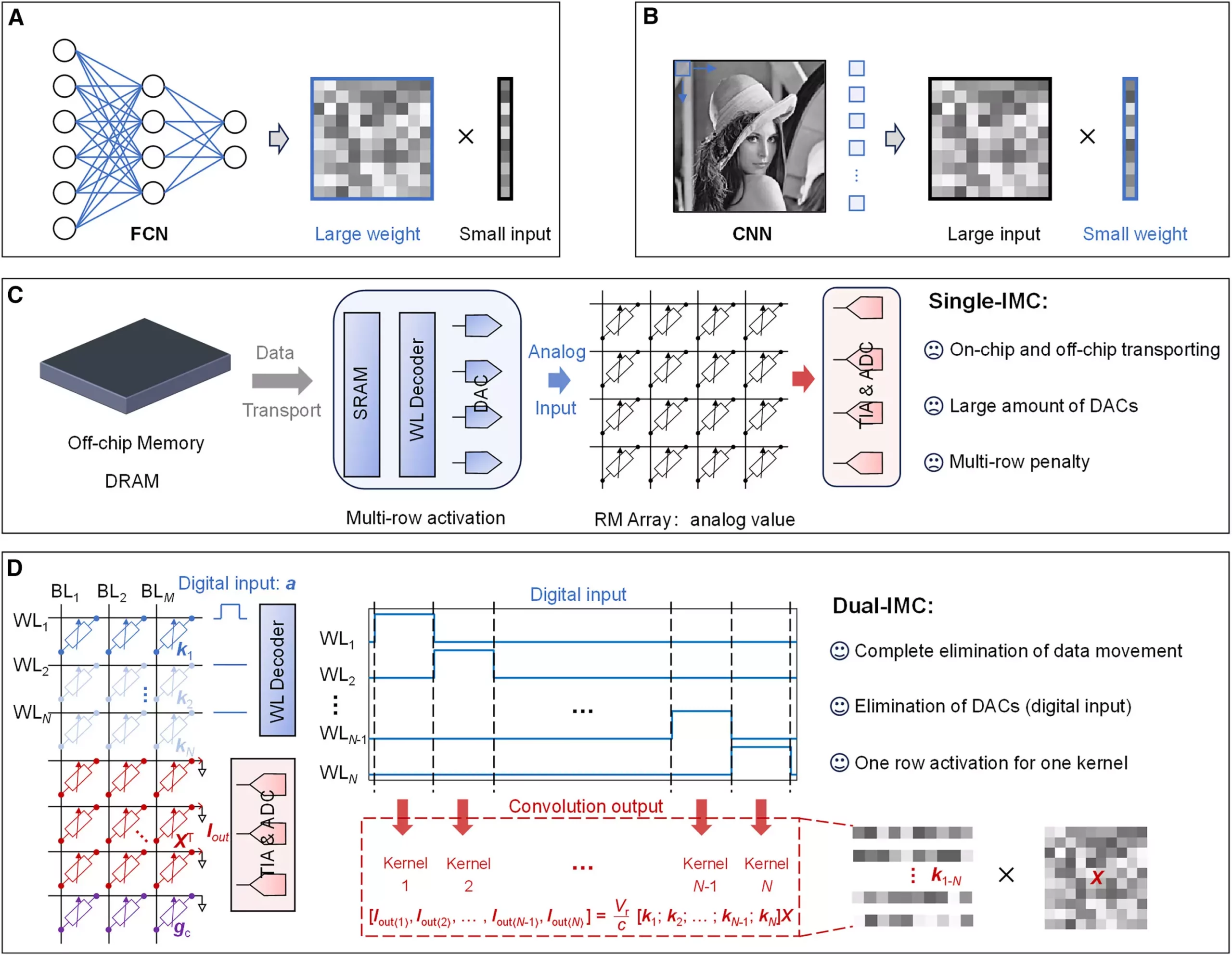

The von Neumann architecture, the cornerstone of computing for decades, has a fundamental limitation: data must constantly travel between the processor and memory, and the speed of that movement caps overall performance. As AI models and their datasets grow dramatically, the time spent transferring data becomes an ever larger obstacle. The problem is especially acute for matrix-vector multiplications (MVMs), the core operation of the neural networks behind modern machine learning. The bottleneck shows up not only as slower computation but also as higher energy consumption and cost, because so much data has to be moved.
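To make the MVM bottleneck concrete, here is a minimal Python sketch (an illustration, not code from the research) that computes a matrix-vector product and counts the bytes a conventional processor would move for it. It assumes 32-bit values and that every weight, input, and output crosses the memory bus exactly once; `matvec` and `mvm_traffic` are hypothetical helper names.

```python
# A dense neural-network layer computes the matrix-vector product y = W x.
# On a von Neumann machine every weight must travel from memory to the
# processor, so we count both the arithmetic and the bytes moved.

def matvec(W: list[list[float]], x: list[float]) -> list[float]:
    """Plain matrix-vector product: y[i] = sum_j W[i][j] * x[j]."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def mvm_traffic(rows: int, cols: int, bytes_per_value: int = 4) -> tuple[int, int]:
    """Return (flops, bytes_moved) for one MVM, assuming each weight and input
    is read from memory once and each output is written once.
    bytes_per_value=4 assumes 32-bit floats (an illustrative choice)."""
    flops = 2 * rows * cols                    # one multiply and one add per weight
    values_moved = rows * cols + cols + rows   # weights + inputs + outputs
    return flops, values_moved * bytes_per_value

print(matvec([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0]))  # [3.0, 7.0]

flops, moved = mvm_traffic(4096, 4096)
print(f"{flops / moved:.3f} FLOPs per byte")          # 0.500 FLOPs per byte
```

Because each weight is used only once per MVM, the ratio stays at roughly half a floating-point operation per byte moved, so on conventional hardware such an operation is typically limited by memory bandwidth rather than by arithmetic throughput. That data-movement cost is what in-memory computing tries to remove.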

The conventional response to these challenges has been single in-memory computing (single-IMC), which keeps the neural network weights in the memory array and performs part of the computation inside the memory chip itself. However, single-IMC still requires data to shuttle back and forth between on-chip and off-chip memories, and it relies on digital-to-analog converters (DACs) to feed inputs into the array. These components add complexity, consume power, and take up chip area, limiting the overall performance and efficiency of AI systems.
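In-memory MVMs are commonly computed on a crossbar of memory cells such as RRAM: each weight is stored as a cell conductance, inputs are applied as row voltages, Ohm's law performs the multiplications, and Kirchhoff's current law sums the resulting currents along each column. The sketch below models an idealized single-IMC read under that assumption, including the DAC step that turns digital inputs into voltages. The 4-bit resolution, the 0.2 V maximum read voltage, and all function names are hypothetical; real arrays also contend with device noise, wire resistance, and signed weights, which this sketch ignores (it assumes non-negative weights).

```python
# Idealized model of single-IMC on a resistive crossbar (illustrative only).
# Weights are stored as conductances G[i][j] in siemens; inputs arrive as
# digital values and must be converted to voltages by DACs.

def dac(value: float, bits: int = 4, v_max: float = 0.2) -> float:
    """Hypothetical n-bit DAC: clip a digital input to [0, 1], quantize it
    to one of 2**bits levels, and scale it to a voltage in [0, v_max]."""
    steps = 2 ** bits - 1
    clipped = min(max(value, 0.0), 1.0)
    return round(clipped * steps) / steps * v_max

def crossbar_mvm(G: list[list[float]], voltages: list[float]) -> list[float]:
    """Column currents of an ideal crossbar: each cell conducts G[i][j] * V[i]
    (Ohm's law) and each column wire sums its cells' currents (Kirchhoff)."""
    n_rows, n_cols = len(G), len(G[0])
    return [sum(G[i][j] * voltages[i] for i in range(n_rows)) for j in range(n_cols)]

def single_imc_mvm(G, x_digital, bits=4, v_max=0.2):
    """Single-IMC read: every input passes through a DAC before reaching the
    array. Returns the output currents and the number of DAC conversions."""
    voltages = [dac(x, bits, v_max) for x in x_digital]
    return crossbar_mvm(G, voltages), len(voltages)

# Example: a 2 x 2 array with conductances of 100-400 microsiemens
G = [[1e-4, 2e-4],
     [3e-4, 4e-4]]
currents, conversions = single_imc_mvm(G, [1.0, 0.5])
print(currents, conversions)
```

The crossbar performs the whole multiply-accumulate in one analog step; the per-input DAC conversions, and the memory fetches that bring each input to its DAC, are the overhead the article attributes to single-IMC.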

Recognizing these limitations, Sun's research team introduced a new scheme: dual in-memory computing (dual-IMC). Rather than storing only the neural network weights in memory, dual-IMC stores both the weights and the input data directly in the memory array. Because every operation then takes place in memory, the researchers streamlined the data-processing pipeline and sidestepped the data transfers that burden standard computing architectures.

In their recent publication, the team demonstrated the dual-IMC approach experimentally using resistive random-access memory (RRAM) devices. The results show substantial improvements in computing performance, particularly for tasks such as signal recovery and image processing.

Benefits of Dual In-Memory Computing

The dual-IMC scheme offers several advantages for both energy consumption and operational efficiency. Firstly, because the computation happens entirely in memory, it removes the need for off-chip dynamic random-access memory (DRAM) and on-chip static random-access memory (SRAM), saving considerable time and energy.

Secondly, minimizing data movement, previously a key bottleneck, substantially improves computing performance. Without the constant transfers between different memory types, processing is faster and latency is lower.

Additionally, the dual-IMC framework eliminates the need for DACs, lowering production costs and freeing up valuable chip area. Removing this overhead simplifies manufacturing and also reduces overall power consumption, making the approach more environmentally friendly.
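The benefits above boil down to removing per-input work from every MVM. As a rough bookkeeping sketch, assume (hypothetically) that single-IMC fetches each layer's input values from DRAM or SRAM and converts each one with a DAC, while dual-IMC, as the article describes, needs neither. The layer widths and the `count_input_events` helper below are illustrative assumptions, not figures from the research, and the model ignores costs such as writing inputs into the array or reading outputs back out.

```python
# Rough event count for one forward pass through dense layers (illustrative).
# Assumption: single-IMC fetches every layer input from memory outside the
# array and converts it with a DAC; dual-IMC keeps inputs in the array.

def count_input_events(layer_input_widths: list[int], scheme: str) -> tuple[int, int]:
    """Return (memory fetches, DAC conversions) needed to feed inputs to the arrays."""
    fetches = 0
    dac_conversions = 0
    for n_in in layer_input_widths:
        if scheme == "single-IMC":
            fetches += n_in          # input values moved in from DRAM/SRAM
            dac_conversions += n_in  # one DAC conversion per input value
        elif scheme == "dual-IMC":
            pass                     # inputs already reside in the memory array
        else:
            raise ValueError(f"unknown scheme: {scheme}")
    return fetches, dac_conversions

layers = [784, 256, 128]  # hypothetical layer input widths
for scheme in ("single-IMC", "dual-IMC"):
    print(scheme, count_input_events(layers, scheme))
```

With these widths, the single-IMC model needs 1,168 fetches and 1,168 DAC conversions per forward pass, while the dual-IMC model needs none of either. The counts grow with network size, which is why eliminating them matters most for large models.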

The Future of AI and Computing Architectures

As the digital landscape evolves, demand for faster, more efficient data processing keeps growing. The dual-IMC methodology could reshape both computer architectures and the field of artificial intelligence itself. With better energy efficiency and computational performance, this research could bring broad improvements to applications ranging from complex machine learning algorithms to everyday consumer technologies.

The dual-IMC scheme is a significant step toward overcoming the long-standing von Neumann bottleneck. As Professor Sun Zhong and his team continue to push the boundaries of AI and machine learning hardware, advances like this could redefine what is possible in computing and artificial intelligence.
