Human emotions are intricate, shaped by personal experience, cultural background, and innate psychological factors. As interest grows in understanding the full spectrum of human emotion, researchers are constantly seeking new methods to capture and quantify these fleeting feelings. Recent advances in artificial intelligence (AI) have opened new pathways for emotion recognition, promising a future in which machines interpret human emotions more accurately than ever before by combining insights from psychology with modern computational techniques.

The Challenge of Emotion Recognition

Emotions often defy simple categorization. They take many forms and are expressed differently from one individual to the next, which makes it difficult to define them through a single lens. Traditional methods that focus on verbal communication or direct observation have proven limited. A nod, for instance, can signal agreement, acknowledgment, or mere politeness depending on the context. Recognizing such subtleties requires not only refined perception but also an understanding that extends beyond facial expressions or vocal tone alone.

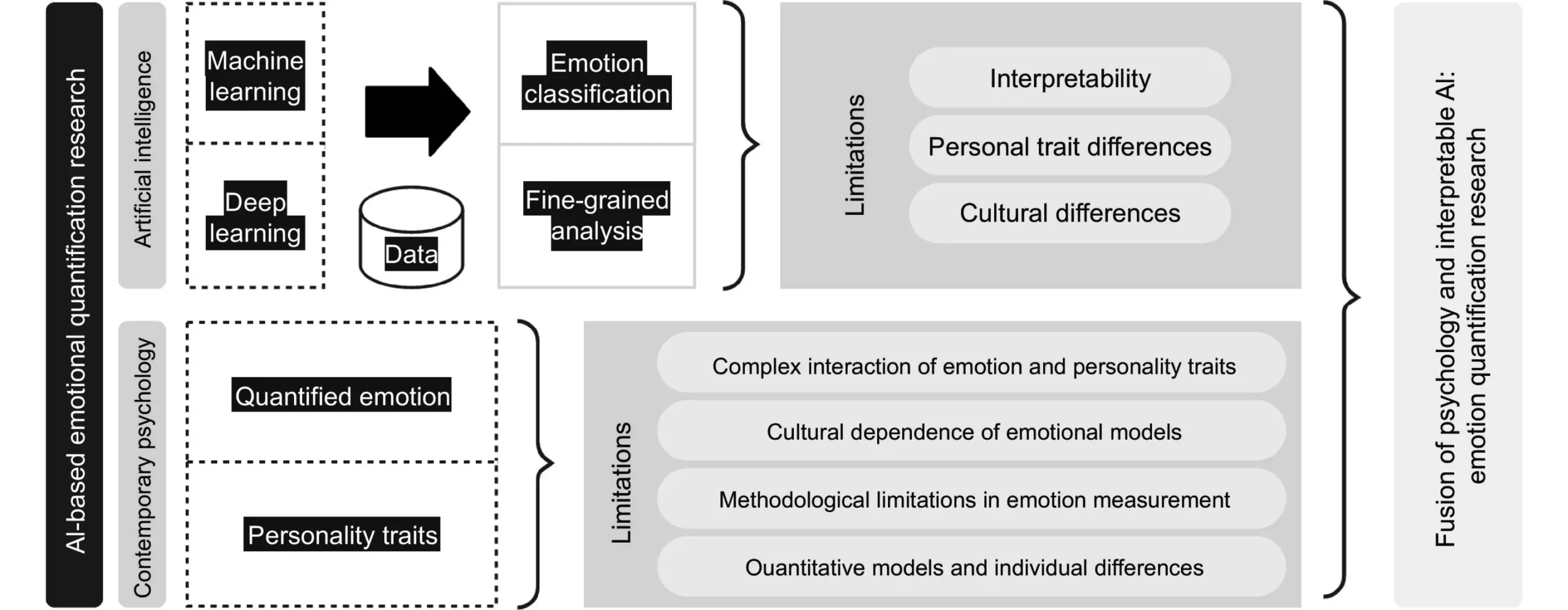

Researchers have increasingly turned to AI to bridge the gap between human emotional expression and machine interpretation. Trained on large datasets, modern algorithms can process vast amounts of data and identify patterns that humans might miss. Understanding emotions, however, requires more than a single analytical approach; it demands knowledge from multiple disciplines, which is the core motivation for multi-modal emotion recognition.

Emotion quantification is evolving rapidly through a range of techniques. Facial emotion recognition (FER), for example, uses machine learning to decode facial expressions, while gesture recognition captures the subtleties of body language. Physiological signals such as heart rate variability, skin conductance, and even brain activity measured via EEG further enrich the data from which AI algorithms draw insight.
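Heart rate variability is the most directly computable of the physiological signals listed above. As a minimal sketch, the snippet below computes RMSSD (the root mean square of successive differences between heartbeats), a standard time-domain HRV measure. It assumes the RR intervals (times between consecutive beats) have already been extracted from an ECG or pulse sensor and cleaned of artifacts; the function name `rmssd` and the sample values are illustrative, not taken from any particular system.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences (RMSSD) between RR intervals.

    Assumes `rr_intervals_ms` holds consecutive inter-beat intervals in
    milliseconds, already cleaned of ectopic beats and sensor artifacts.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Difference between each pair of adjacent intervals.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    # Mean of the squared differences, then the square root.
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    # Hypothetical RR intervals (ms) from a short recording.
    rr = [800, 810, 790, 805, 795]
    print(f"RMSSD: {rmssd(rr):.2f} ms")
```

In a real pipeline, a value like this would typically be computed over sliding windows and passed to a classifier alongside other features, rather than interpreted on its own as a sign of any particular emotion.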

Multi-modal emotion recognition combines these techniques to form a more comprehensive picture of emotional states. By integrating visual, auditory, and physiological information, researchers aim to build a holistic framework that captures how emotions function not in isolation but as part of a larger, interconnected human experience. Drawing on several perceptual channels can improve the accuracy and robustness of emotion recognition models, because one channel can compensate when another is ambiguous or missing, and this in turn supports applications that are sensitive to the complexity of human emotional life.
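One simple way to combine modalities is late (decision-level) fusion, in which each modality has its own classifier and their outputs are merged afterward. The article does not specify a fusion strategy, so the sketch below is only one illustrative option: it assumes every per-modality model outputs a probability distribution over the same label set and merges those distributions with a weighted average. The emotion labels, the weights, and the `late_fusion` helper are all hypothetical.

```python
from typing import Dict, List

# Hypothetical emotion label set shared by all modality models.
EMOTIONS: List[str] = ["happy", "sad", "angry", "neutral"]


def late_fusion(
    modality_probs: Dict[str, List[float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Combine per-modality class probabilities by weighted averaging.

    Each value in `modality_probs` is assumed to be a probability
    distribution over EMOTIONS, produced by a separate model.
    """
    total_weight = sum(weights[m] for m in modality_probs)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    fused = [0.0] * len(EMOTIONS)
    for modality, probs in modality_probs.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{modality}: expected {len(EMOTIONS)} probabilities")
        # Normalize so the fused result is still a probability distribution.
        w = weights[modality] / total_weight
        for i, p in enumerate(probs):
            fused[i] += w * p
    return dict(zip(EMOTIONS, fused))


if __name__ == "__main__":
    # Hypothetical outputs from three single-modality classifiers.
    outputs = {
        "face": [0.60, 0.10, 0.10, 0.20],
        "voice": [0.30, 0.20, 0.10, 0.40],
        "physiology": [0.40, 0.10, 0.30, 0.20],
    }
    # Hypothetical reliability weights, e.g. tuned on validation data.
    weights = {"face": 0.5, "voice": 0.3, "physiology": 0.2}
    fused = late_fusion(outputs, weights)
    print(max(fused, key=fused.get), fused)
```

Weighting lets a more reliable channel dominate, and normalizing by the total weight keeps the result a valid distribution even when the weights do not sum to one. Early (feature-level) fusion, which concatenates features from all modalities before a single classifier, is a common alternative.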

As emotion recognition technology matures, its potential applications in healthcare, education, and customer service become increasingly promising. In healthcare, AI-driven emotion quantification could support significant advances in mental health assessment. By analyzing an individual's emotional responses over time, healthcare providers can develop tailored interventions that help address mental health challenges outside the limits of conventional, session-based therapy. The same idea of personalization extends to education, where a system could adapt the learning environment to students' emotional states.

The integration of AI into emotion quantification opens up many possibilities, particularly as the world becomes more attuned to mental health. By monitoring emotional well-being continuously, AI can act as a supportive tool, providing valuable insights while keeping individuals' emotional data private.

While the technological capabilities of emotion quantification are promising, they also raise ethical and safety considerations that cannot be overlooked. Data privacy is paramount: organizations using this technology must establish robust protocols for collecting, storing, and handling sensitive information. Transparency about how data is used, together with clear communication with users, will be crucial for building trust and ensuring compliance with regulatory frameworks.

Cultural sensitivity is another pivotal concern. Emotions are often interpreted through a cultural lens, so AI systems must be designed to recognize and adapt to cultural differences. Without this cultural competency, emotion recognition systems risk misinterpreting expressions and reinforcing biases. Meeting this challenge will require collaboration among experts in psychology, AI, ethics, and cultural studies to build systems that accurately reflect the diversity of human emotional expression across communities.

As we navigate the complex landscape of emotion quantification, integrating AI with human psychology presents both tremendous opportunities and notable challenges. By embracing interdisciplinary collaboration and upholding ethical standards, we can unlock a future in which AI adds genuine value to our understanding of emotion and enriches our interactions across diverse contexts. As the global focus on mental health intensifies, intelligent systems adept at recognizing and responding to human emotions could transform lives for the better.
