As artificial intelligence (AI) advances, researchers keep looking for ways to make language models more effective. One recent development is Co-LLM, an algorithm from the Massachusetts Institute of Technology’s (MIT) Computer Science and Artificial Intelligence Laboratory (CSAIL). It is designed to foster collaboration between a general-purpose language model and specialized models.

Like people, AI systems often struggle with complex questions. Just as people reach out to peers or experts when they are uncertain, AI models need a mechanism for recognizing when they require assistance. Acknowledging the limitations of standalone models is crucial: relying solely on a general-purpose model can produce inaccurate or incomplete answers to intricate questions.

Co-LLM fills this gap with a more organic approach to collaboration between different AI models. The algorithm allows a general-purpose language model to dynamically seek assistance from a more specialized model when a task requires deeper knowledge. In specialized fields such as medicine or mathematics, for instance, Co-LLM is meant to produce responses that are not only relevant but also more accurate.

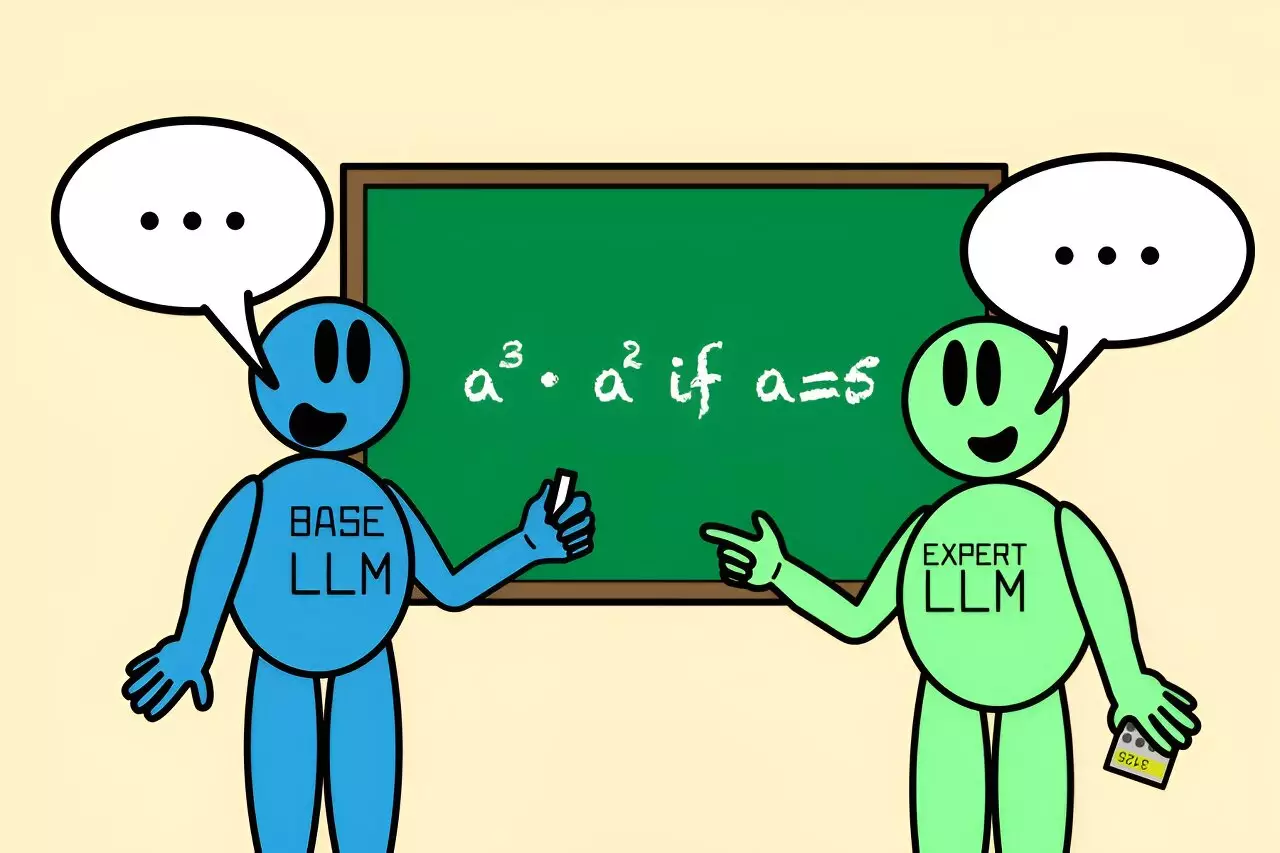

At the heart of the Co-LLM approach is a switch variable, which acts as a liaison between the two models. As the general-purpose model generates text, the switch variable evaluates each token (roughly, each word or word fragment the model produces) to determine whether that position would benefit from the specialized model’s input. The interaction resembles how a project manager decides when it is prudent to bring an expert into a discussion.

To illustrate, imagine asking Co-LLM to name extinct bear species. The general-purpose model might draft the initial response, while the switch variable recognizes the points where the expert model’s input is needed to supply specific details, such as the year a species went extinct. This collaboration improves the accuracy of the final answer, showing how a blend of expert and general knowledge can lead to better outcomes.
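The token-by-token handoff described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: the function `co_decode`, the `scripted` toy models, the `toy_switch` rule, and the 0.5 threshold are all hypothetical, and the placeholder tokens `UNKNOWN` and `YEAR` stand in for a fact the general model lacks and the expert supplies.

```python
from typing import Callable, List, Tuple

# Hypothetical toy interfaces (assumptions for illustration only):
# a "model" maps the tokens so far to the next token, and a "switch"
# maps the tokens so far to a deferral probability in [0, 1].
NextToken = Callable[[List[str]], str]
Switch = Callable[[List[str]], float]


def co_decode(
    base: NextToken,
    expert: NextToken,
    switch: Switch,
    prompt: List[str],
    max_new_tokens: int,
    threshold: float = 0.5,
) -> Tuple[List[str], int]:
    """Generate tokens, deferring to the expert only where the switch fires."""
    tokens = list(prompt)
    expert_calls = 0
    for _ in range(max_new_tokens):
        if switch(tokens) > threshold:
            next_tok = expert(tokens)  # expert supplies this single token
            expert_calls += 1
        else:
            next_tok = base(tokens)  # general-purpose model continues
        if next_tok == "<eos>":
            break
        tokens.append(next_tok)
    return tokens[len(prompt):], expert_calls


def scripted(script: List[str], offset: int) -> NextToken:
    """Toy model that replays a fixed script, indexed by generated position."""
    return lambda tokens: script[len(tokens) - offset]


prompt = ["Name", "an", "extinct", "bear", "."]
# The base model does not know the fact; the expert does (placeholders only).
base = scripted(["It", "died", "out", "in", "UNKNOWN", "<eos>"], len(prompt))
expert = scripted(["It", "died", "out", "in", "YEAR", "<eos>"], len(prompt))


def toy_switch(tokens: List[str]) -> float:
    """Hand-written rule: defer right after "in", where a fact is expected."""
    return 0.9 if tokens and tokens[-1] == "in" else 0.1


generated, expert_calls = co_decode(base, expert, toy_switch, prompt, 10)
print(" ".join(generated), "| expert calls:", expert_calls)
```

In this toy run the expert is consulted for one token out of five, which is the point of the design: the expert contributes only at positions where the switch judges it useful.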

A key aspect of Co-LLM is that this behavior is learned rather than hand-coded. By exposing the general-purpose model to domain-specific data, researchers help it learn where it struggles to provide accurate information, thereby training it to engage the expert model effectively. This training process resembles human learning, in which individuals gradually recognize the limits of their knowledge and learn when to consult others.
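One simplified way to picture this kind of training is shown below. It is a stand-in chosen for illustration, not the procedure the MIT researchers used: it assumes we can measure, on domain data, how much probability each model assigns to the correct token, marks positions where the expert does better, and fits a tiny logistic gate that predicts those positions from the general model's own confidence. All numbers and names (`deferral_labels`, `fit_gate`, `defer_probability`) are made up for the example.

```python
import math
from typing import List, Tuple


def deferral_labels(base_probs: List[float], expert_probs: List[float]) -> List[int]:
    """Label a position 1 when the expert gives the correct token more probability."""
    return [1 if e > b else 0 for b, e in zip(base_probs, expert_probs)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_gate(
    features: List[float], labels: List[int], lr: float = 0.5, steps: int = 2000
) -> Tuple[float, float]:
    """Fit a one-feature logistic gate with plain batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(features)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(w * x + b) - y  # gradient of the log loss
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Made-up per-token numbers on hypothetical domain-specific text.
# Probability each model assigns to the *correct* next token:
base_probs = [0.90, 0.80, 0.85, 0.20, 0.10, 0.95, 0.15, 0.70]
expert_probs = [0.60, 0.70, 0.50, 0.90, 0.80, 0.90, 0.85, 0.60]
# Feature available at inference time: the base model's confidence in its
# own top prediction (no access to the correct answer needed).
base_confidence = [0.90, 0.80, 0.85, 0.30, 0.20, 0.95, 0.25, 0.70]

labels = deferral_labels(base_probs, expert_probs)
w, b = fit_gate(base_confidence, labels)


def defer_probability(confidence: float) -> float:
    """Learned probability of handing the next token to the expert."""
    return sigmoid(w * confidence + b)


print("labels:", labels)
print("defer when unsure:", defer_probability(0.2) > 0.5)
print("defer when confident:", defer_probability(0.9) > 0.5)
```

The gate ends up with a negative weight on confidence, so it leans toward the expert exactly where the general model is unsure, which mirrors the "know when to ask" behavior described above.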

The potential applications for Co-LLM span various sectors, notably healthcare and education. For example, it can distinguish content that requires expert verification from content that is general knowledge. Asked for the ingredients of a prescription drug, a general model alone might give a vague response, whereas pairing it with a biomedical expert model could yield a definitive answer.

Moreover, the adaptability of Co-LLM enables it to handle evolving human knowledge. The researchers at MIT are contemplating mechanisms to update the expert model seamlessly, ensuring that information remains current. By doing so, the model could lend its expertise not only in routine queries but also in developing documents or responding to emergent issues with the latest information.

What sets Co-LLM apart from traditional models is its ability to engage different models based on token-level analysis rather than requiring simultaneous engagement of all components. For example, an erroneous calculation by a general-purpose model when confronted with a math problem can be rectified by bringing in a dedicated math model within the Co-LLM framework. This targeted collaboration translates to speedier and more resource-efficient responses, as expert input is not needed for every fragment, only when the model identifies a need.

The findings so far suggest that intelligent model collaboration can improve both precision and efficiency. As the methodology evolves, the approach could be refined further, for example with self-correction mechanisms that help models recover from errors. Its ability to support specialized queries while retaining general knowledge could also make it valuable across applications ranging from enterprise-level document generation to academic tutoring.

Co-LLM demonstrates how collaboration between AI models can be structured in ways that mimic human problem-solving. By training models to recognize their limits and actively seek assistance, this approach points toward more capable and versatile AI systems. As research continues, frameworks of this kind could improve the performance of language models, leading to greater accuracy and applicability across numerous domains. The fusion of general knowledge with specialized insights could well set a benchmark for future developments in artificial intelligence.
