On August 1, 2024, the European Union's new law on artificial intelligence, the AI Act, entered into force. The legislation sets the limits within which AI may operate and seeks to protect users, particularly in sectors that handle sensitive data such as health and employment. It is the first comprehensive regulatory framework of its kind worldwide, reflecting a growing recognition among policymakers of the potential dangers of artificial intelligence.

The law not only sets strict rules for the development and deployment of AI systems but also requires software engineers to work in a landscape fundamentally altered by legal considerations. A team of scholars, including Saarland University computer science professor Holger Hermanns and law professor Anne Lauber-Rönsberg of Dresden University of Technology, has begun to examine how the law affects the working habits and responsibilities of programmers who build AI applications.

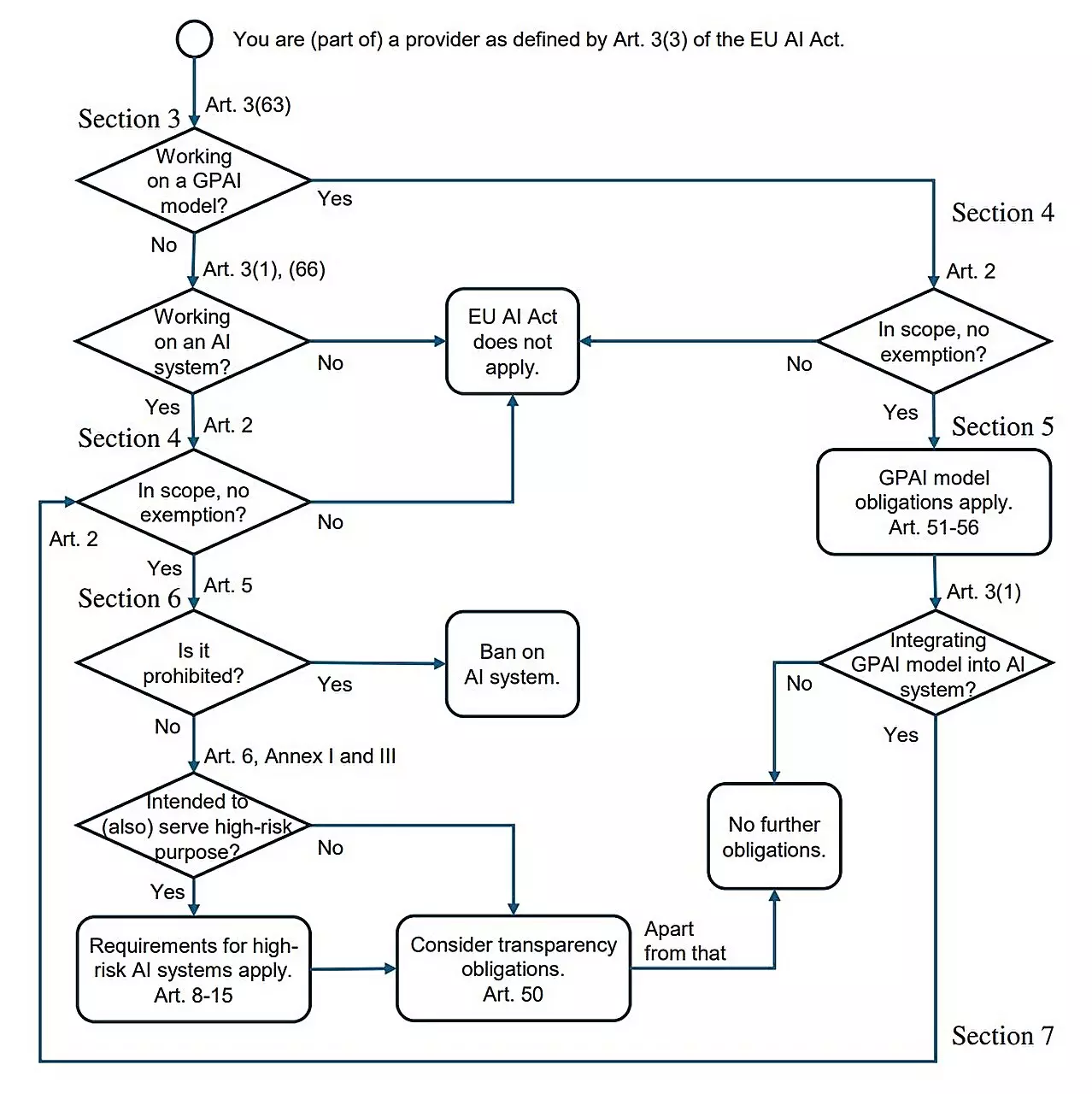

As the AI Act takes effect, a key concern among programmers is how the legal framework will affect their day-to-day work. Many software developers are unsure what the law means for them and feel overwhelmed by a dense legal text that runs to 144 pages. To provide clarity, Hermanns and his colleagues wrote a research paper titled “AI Act for the Working Programmer,” which distills the essential points for those working at the coalface of AI development.

One of the paper’s key findings is reassuring: for many programmers, life may proceed largely unchanged. The provisions of the AI Act primarily affect the development of high-risk AI applications, those with significant consequences for the people they are used on. Simpler AI implementations, such as those used in video games, face little to no impact from the regulation. The Act’s guiding principle is to minimize the risk of discrimination and harm to users, particularly where personal and sensitive information is involved.

Hermanns explains that high-risk AI systems cover a range of applications that require stringent oversight. Examples include AI used in recruitment, credit scoring, and healthcare management. Because these systems feed directly into decisions about people’s lives, they demand rigorous attention to ethical considerations.

The paper stresses the core requirement imposed by the AI Act: developers must ensure that the data used to train high-risk systems is sound and suited to its intended purpose. In essence, training data must be free of biases that could inadvertently lead to discriminatory outcomes, which makes error mitigation a primary focus during system design and deployment. Systems must also log their decisions and operations so that these can be traced afterwards, much as an aviation black box does, to improve transparency and accountability.
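To make the black-box analogy concrete, here is a minimal Python sketch of a decision log for a hypothetical high-risk system. It is an illustration only: neither the AI Act nor the paper, as described here, prescribes a particular log format. The names `DecisionLog`, `make_record`, and `verify_chain`, the record fields, and the use of hash chaining to make later tampering detectable are all assumptions made for this example.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any


def _digest(body: dict[str, Any]) -> str:
    """Hash a record body deterministically (keys sorted before encoding)."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def make_record(model_version: str, inputs: dict[str, Any],
                output: Any, prev_hash: str = "") -> dict[str, Any]:
    """Build one log entry describing a single automated decision.

    The field names are illustrative assumptions, not a mandated schema.
    """
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "prev_hash": prev_hash,  # links this entry to the previous one
    }
    return {**body, "hash": _digest(body)}


def verify_chain(records: list[dict[str, Any]]) -> bool:
    """Return True if no entry was altered, removed, or reordered."""
    prev_hash = ""
    for record in records:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


class DecisionLog:
    """Append-only, in-memory log; a real system would persist entries."""

    def __init__(self) -> None:
        self.records: list[dict[str, Any]] = []

    def append(self, model_version: str, inputs: dict[str, Any],
               output: Any) -> dict[str, Any]:
        prev_hash = self.records[-1]["hash"] if self.records else ""
        record = make_record(model_version, inputs, output, prev_hash)
        self.records.append(record)
        return record


if __name__ == "__main__":
    log = DecisionLog()
    log.append("credit-model-0.1", {"income": 42000, "years_employed": 3}, "approve")
    log.append("credit-model-0.1", {"income": 18000, "years_employed": 0}, "refer_to_human")
    print(verify_chain(log.records))
```

Each entry records what the system saw, which model version decided, and what it decided, so that an individual outcome can be reconstructed later. Chaining the hashes is one possible design choice for noticing after-the-fact edits; a plain append-only file or database table may be enough in many settings.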

The AI Act also mandates thorough documentation, requiring developers to supply comprehensive user manuals and operational information for their systems. This transparency supports effective monitoring and the early detection of problems, so that corrective measures can be taken quickly. It also ensures that the individuals or organizations deploying AI systems know enough to oversee their operation responsibly.

Although the regulation imposes considerable constraints, Hermanns emphasizes that everyday software development will be largely unaffected. Routine programming tasks for low-risk applications will continue without new compliance burdens.

In their analysis, Hermanns and his team take an optimistic view of the AI Act. They argue that, rather than hindering the development of AI technologies, the legislation may foster a culture of accountability and ethical responsibility within the tech sector. They also contend that the Act keeps Europe not just part of the global conversation on AI, but a leader in setting standards that protect users.

Despite significant changes in the legal landscape, the AI field can retain its ethos of innovation. Because research and development activities remain largely free from restrictions, engineers and creators can continue to push the boundaries of what is possible in artificial intelligence. The AI Act represents a bold step towards safeguarding the public interest while promoting a responsible approach to technology, one that acknowledges both its potential and its perils.

The European AI Act is not merely an exercise in regulation; it is a historic moment that could reshape the way AI is developed and perceived across the continent and beyond. As practitioners adapt to this new reality, it remains crucial for developers to stay informed, engaged, and proactive in meeting these emerging standards.
