Quantum computing stands at the threshold of a technological renaissance, promising to revolutionize industries by solving problems beyond the reach of classical computers. However, a significant hurdle remains: qubit noise, which causes errors that corrupt quantum computations. These errors stem from the nature of quantum mechanics itself: qubits, the fundamental units of quantum computers, exist in fragile states that are easily disturbed by their environment. As researchers continue to explore the potential of this groundbreaking technology, the intersection of artificial intelligence (AI) with quantum error correction offers an opportunity that could finally make quantum computing practical for real-world applications.

The traditional bit, the foundational element of classical computing, is unambiguous: it is either 0 or 1. In contrast, a qubit can exist in a superposition of 0 and 1, a property central to the ability of quantum computers to outperform their classical counterparts on certain computations. Yet this very property makes qubits vulnerable to decoherence, the gradual loss of quantum information to the surrounding environment, which introduces errors. Managing these errors is critical, and so far quantum error correction codes have been the primary solution. However, the complexity and computational cost of these codes have raised questions about their practical viability, until now.
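To make decoherence concrete, here is a minimal Python sketch of pure dephasing acting on a single qubit prepared in the equal superposition |+> = (|0> + |1>)/sqrt(2). It assumes the simple textbook model in which the coherence (off-diagonal) term of the density matrix decays as exp(-t/T2) while the 0/1 populations stay fixed. The function name and the 100-microsecond T2 are hypothetical, illustrative choices, not figures from the CSIRO or IBM work.

```python
import math


def prob_plus_after_dephasing(t_us: float, t2_us: float = 100.0) -> float:
    """Probability of still measuring |+> after t_us microseconds of pure dephasing.

    Assumed model: populations of |0> and |1> remain 1/2 each, while the
    off-diagonal density-matrix element rho_01 decays as 0.5 * exp(-t/T2).
    The default T2 of 100 us is a hypothetical, illustrative value.
    """
    rho_01 = 0.5 * math.exp(-t_us / t2_us)  # remaining coherence
    # <+|rho|+> = (rho_00 + rho_11 + rho_01 + rho_10) / 2 = (1 + 2 * rho_01) / 2
    return 0.5 * (1.0 + 2.0 * rho_01)


for t in (0, 50, 100, 500):
    print(f"t = {t:>3} us  P(+) = {prob_plus_after_dephasing(t):.3f}")
```

At t = 0 the superposition is intact and P(+) is 1; as t grows well beyond T2, P(+) drifts toward 0.5, meaning the qubit behaves like a random classical coin and the quantum information is lost.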

The Role of Artificial Intelligence

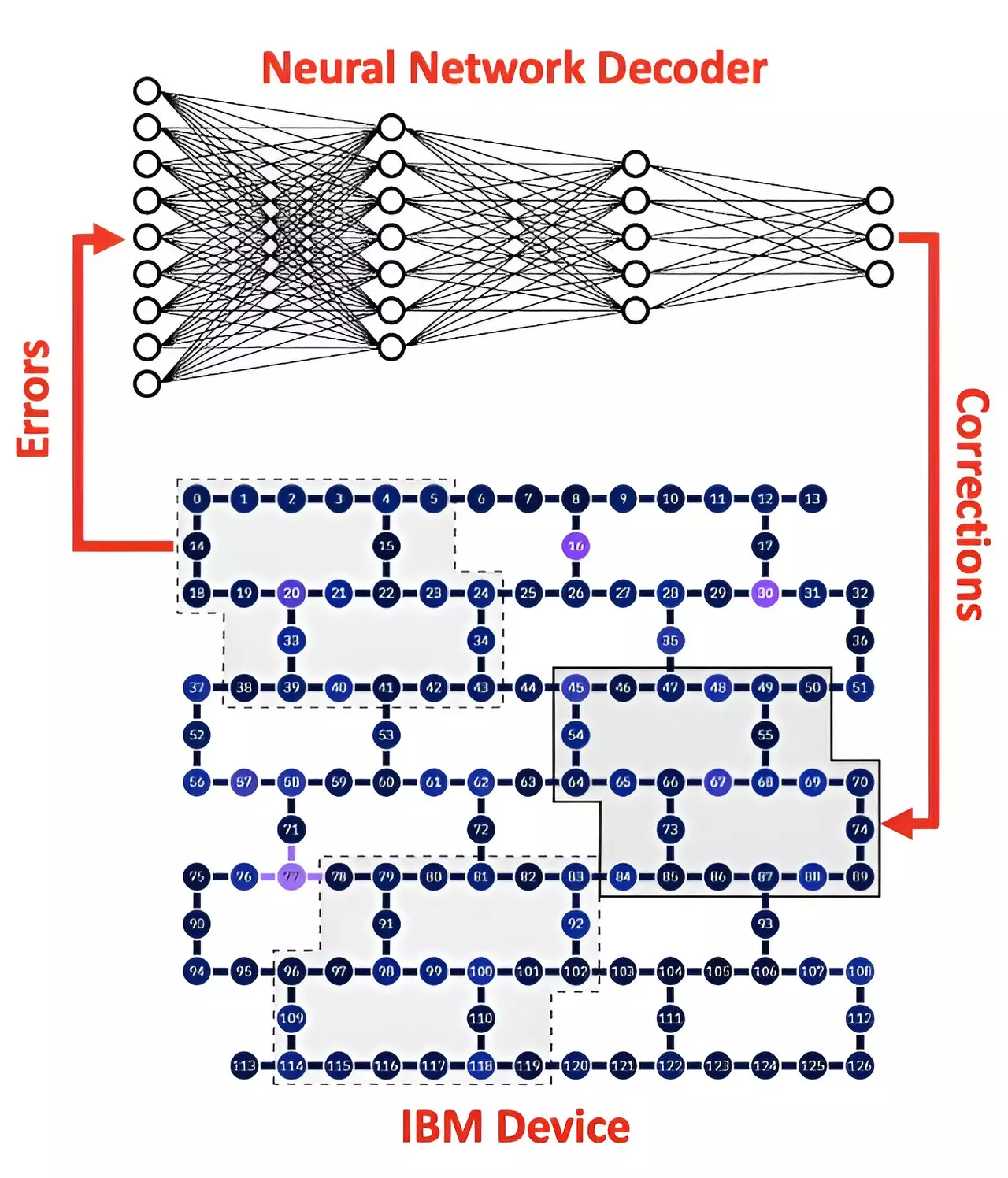

Recent research led by CSIRO, Australia’s national science agency, shows how AI can help with the challenges posed by qubit noise. The study uses a neural network for syndrome decoding: inferring, from the outcomes of error-detecting measurements (the syndrome), which errors most likely occurred and how to correct them. What sets the work apart is that the neural network decoder operates directly on error data obtained from real quantum processors built by IBM, marking a significant step in our understanding of quantum error correction on actual hardware.
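Syndrome decoding is easiest to see on the smallest textbook example, the 3-qubit bit-flip repetition code. The Python sketch below is not the CSIRO decoder; it is a hand-written lookup table, and it simulates only classical bit flips, whereas on real hardware the parity checks are measured through ancilla qubits without reading the data qubits directly. The names `syndrome`, `correct`, and `DECODER` are illustrative.

```python
from typing import Dict, Tuple

Bits = Tuple[int, ...]


def syndrome(bits: Bits) -> Tuple[int, int]:
    """Parity checks Z0Z1 and Z1Z2 of the 3-qubit bit-flip code, as classical bits."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


# Lookup-table decoder: each syndrome maps to the single-qubit flip most likely
# to have caused it (assumes independent, low-probability bit flips).
DECODER: Dict[Tuple[int, int], Bits] = {
    (0, 0): (0, 0, 0),  # no error detected
    (1, 0): (1, 0, 0),  # qubit 0 disagrees with qubit 1
    (1, 1): (0, 1, 0),  # qubit 1 disagrees with both neighbours
    (0, 1): (0, 0, 1),  # qubit 2 disagrees with qubit 1
}


def correct(received: Bits) -> Bits:
    """Look up the syndrome and flip back the qubit it points to."""
    fix = DECODER[syndrome(received)]
    return tuple(b ^ f for b, f in zip(received, fix))


# Logical 1 is encoded as (1, 1, 1); flip the middle qubit and recover it.
print(correct((1, 0, 1)))  # (1, 1, 1)
```

The table only guarantees recovery from a single flip; two simultaneous flips produce a syndrome that points to the wrong qubit, and the decoder then completes a logical error instead of fixing it.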

Dr. Muhammad Usman, lead scientist of CSIRO’s Data61 Quantum Systems Team, emphasizes the significance of the CSIRO result, stating that the AI-based decoder can effectively handle the complex errors that arise in contemporary quantum hardware. This not only improves the accuracy of quantum computations but also offers a potentially scalable approach to the daunting complexity of quantum noise. Unlike traditional methods that depend on elaborate physical preparations, the neural network approach simplifies the intricate task of correcting errors, potentially accelerating the development of quantum computing.
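A learned decoder replaces a hand-written table with a mapping inferred from observed error data. As a minimal, hedged stand-in for the neural network used in the study, the Python sketch below learns a lookup table by counting, for each syndrome of the 3-qubit bit-flip code, which error pattern occurred most often in simulated samples. The noise model, sample size, random seed, and helper names (`sample_errors`, `learn_decoder`) are all hypothetical. A neural network plays the same role but can generalize to codes whose syndrome space is far too large to tabulate.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Bits = Tuple[int, ...]
Syndrome = Tuple[int, int]


def syndrome(err: Bits) -> Syndrome:
    """Parity checks of the 3-qubit bit-flip repetition code."""
    return (err[0] ^ err[1], err[1] ^ err[2])


def sample_errors(n: int, flip_probs: List[float], rng: random.Random) -> List[Bits]:
    """Draw n error patterns; qubit i flips independently with flip_probs[i].

    Hypothetical stand-in for error data recorded from real hardware.
    """
    return [tuple(int(rng.random() < p) for p in flip_probs) for _ in range(n)]


def learn_decoder(samples: List[Bits]) -> Dict[Syndrome, Bits]:
    """For each observed syndrome, keep the error pattern seen most often with it."""
    seen: Dict[Syndrome, Counter] = defaultdict(Counter)
    for err in samples:
        seen[syndrome(err)][err] += 1
    return {s: counts.most_common(1)[0][0] for s, counts in seen.items()}


rng = random.Random(7)
training = sample_errors(20_000, [0.1, 0.1, 0.1], rng)
decoder = learn_decoder(training)
for s in sorted(decoder):
    print(s, "->", decoder[s])
```

With independent 10% flips on every qubit, the learned table should match the minimum-weight choice of a single flip for each non-zero syndrome. Training on data with a different noise profile can yield a different table, which is the motivation for learning decoders from measured hardware data rather than from idealized assumptions.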

The Path Forward: Scalability and Fault Tolerance

The journey toward full fault tolerance in quantum computing hinges on overcoming the noise that qubits face as systems scale up. Quantum error correction codes spread logical information across multiple physical qubits so that errors on individual qubits are less likely to corrupt the overall computation. However, a significant bottleneck remains: enlarging error correction codes does not yet deliver the expected improvements, because of the high noise levels currently observed in existing quantum processors.

Dr. Usman’s work addresses this critical limitation by demonstrating that an AI-based framework could ultimately enable substantial improvements in error suppression as the physical reliability of qubits improves. By integrating machine learning into quantum error correction, we could move closer to a future in which quantum computers not only solve theoretical problems but also tackle practical challenges in fields such as cryptography, materials science, and the simulation of complex systems.
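The bottleneck described in this section reflects the threshold behaviour of error correction: adding more physical qubits helps only when the physical error rate is below a code-specific threshold. The Python sketch below computes the exact failure probability of majority-vote decoding for a distance-d repetition code under independent bit flips, an idealized model assumed here for illustration; the function name is hypothetical. For this toy code the threshold is p = 0.5, whereas practical codes such as the surface code under realistic noise have thresholds commonly quoted at around 1%.

```python
from math import comb


def repetition_logical_error(p: float, d: int) -> float:
    """Probability that majority vote fails for a distance-d repetition code.

    Each of the d physical bits flips independently with probability p;
    decoding fails when more than half of them flip (d is assumed odd).
    """
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


for p in (0.1, 0.6):
    rates = [repetition_logical_error(p, d) for d in (1, 3, 5, 7)]
    print(f"p = {p}: " + ", ".join(f"{r:.4f}" for r in rates))
```

For p = 0.1 the logical error rate falls as d grows, while for p = 0.6 it rises: enlarging the code only pays off once the physical error rate is below threshold.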

The Promise and Pitfalls Ahead

Despite this progress, it is essential to maintain cautious optimism about the role of AI in quantum computing. While machine learning offers a powerful new toolkit for managing quantum errors, the path is fraught with potential pitfalls. For instance, as quantum processors evolve, the AI models used to decode their errors will need continual retraining, raising questions about the durability and flexibility of such systems.

Ethical considerations surrounding AI applications within quantum technology must also be addressed. As AI systems take on more autonomy in critical computations, designing safeguards against miscalculations and biases becomes imperative. Ensuring that these technologies are both robust and protected against unintended consequences will be vital as society embraces this profound shift.

Ultimately, the fusion of AI and quantum computing represents not only a leap toward more accurate computation but also a conceptual shift in how we approach complex problems at the intersection of disciplines. As we enter this uncharted territory, the collaborative efforts of scientists, engineers, and ethicists will shape a future in which the full potential of quantum computing can be realized, ushering in an era of unparalleled innovation.
